Dask

Technologies Big Data Master MIDS/MFA/LOGOS

2025-01-17

Dask: Big picture

Bird's-eye Big Picture

Dask in picture

Overview: Dask’s place in the universe.

- Delayed: the single-function way to parallelize general Python code.
- Dataframe: parallelized operations on many pandas dataframes spread across your cluster.

Flavours of (big) data

| Type | Typical size | Features | Tool |
|---|---|---|---|
| Small data | A few gigabytes | Fits in RAM | Pandas |
| Medium data | Less than 2 terabytes | Does not fit in RAM, fits on hard drive | Dask |
| Large data | Petabytes | Does not fit on hard drive | Spark |

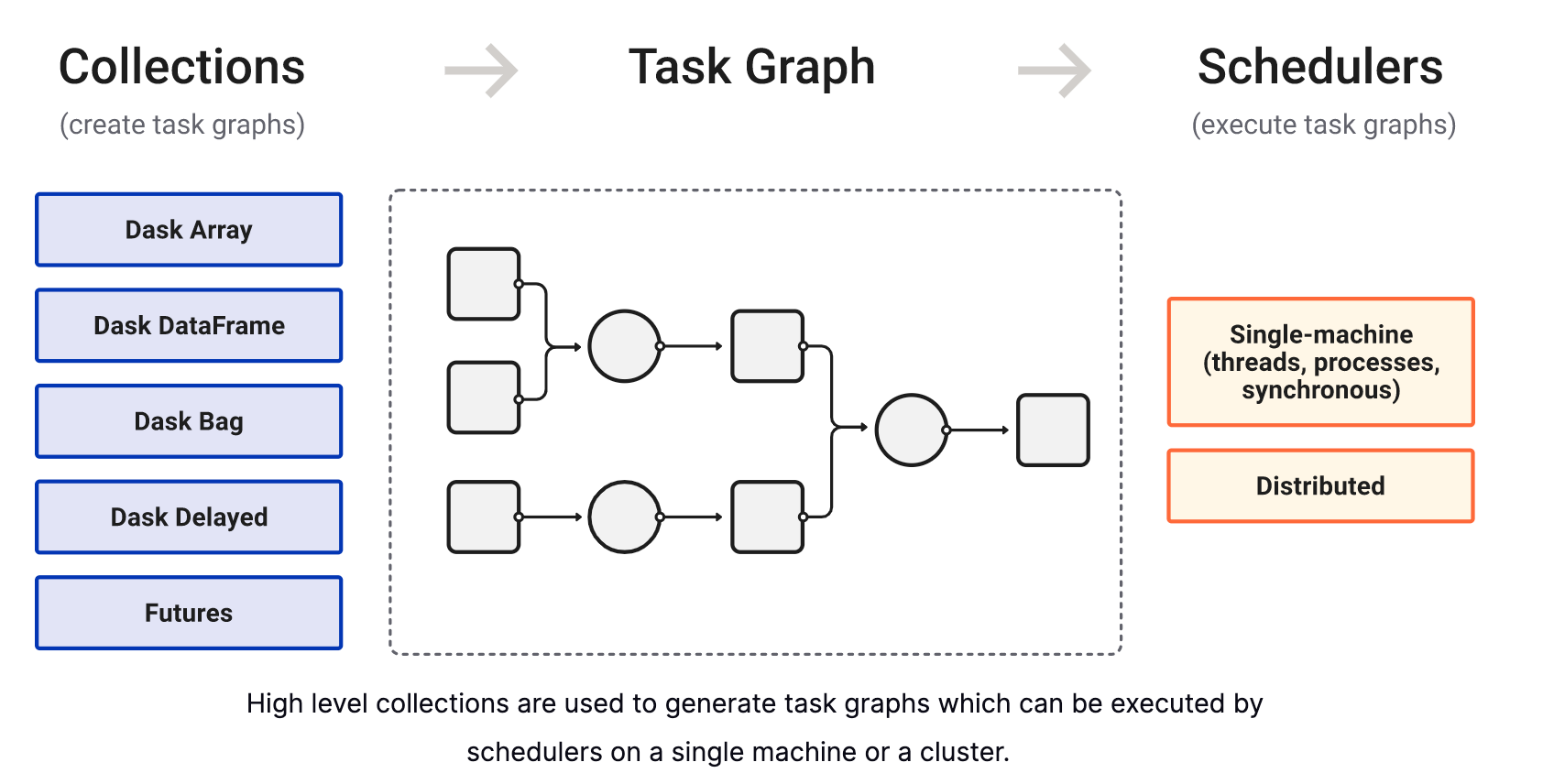

- Dask provides multi-core and distributed parallel execution on larger-than-memory datasets.
- Dask provides high-level Array, Bag, and DataFrame collections that mimic NumPy, lists, and Pandas but can operate in parallel on datasets that do not fit into memory.
- Dask provides dynamic task schedulers that execute task graphs in parallel.
- These schedulers (execution engines) power the high-level collections, but can also power custom, user-defined workloads.
- These schedulers are low-latency and work hard to run computations in a small memory footprint.
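A minimal sketch of two of these collections (the shapes, chunk sizes and values are arbitrary illustrations, not taken from the lecture): operations on them only build a task graph, and `dask.compute` hands that graph to a scheduler.

```python
import dask
import dask.array as da
import dask.bag as db

# A 1000 x 1000 array of ones, split into 4 x 4 chunks of 250 x 250
x = da.ones((1000, 1000), chunks=(250, 250))
print(x.numblocks)               # number of chunks along each axis
array_total = x.sum()            # lazy: nothing has been computed yet

# A bag behaves like a parallel list of Python objects
b = db.from_sequence([1, 2, 3, 4], npartitions=2)
bag_total = b.map(lambda v: v * 2).sum()   # also lazy

# Evaluate both lazy results in a single call; the synchronous scheduler
# keeps this example single-threaded (handy for debugging)
array_result, bag_result = dask.compute(array_total, bag_total, scheduler="synchronous")
print(array_result, bag_result)
```

The same NumPy-style (`sum`) and list-style (`map`) vocabulary is used; only the final `compute` triggers execution.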

Sources

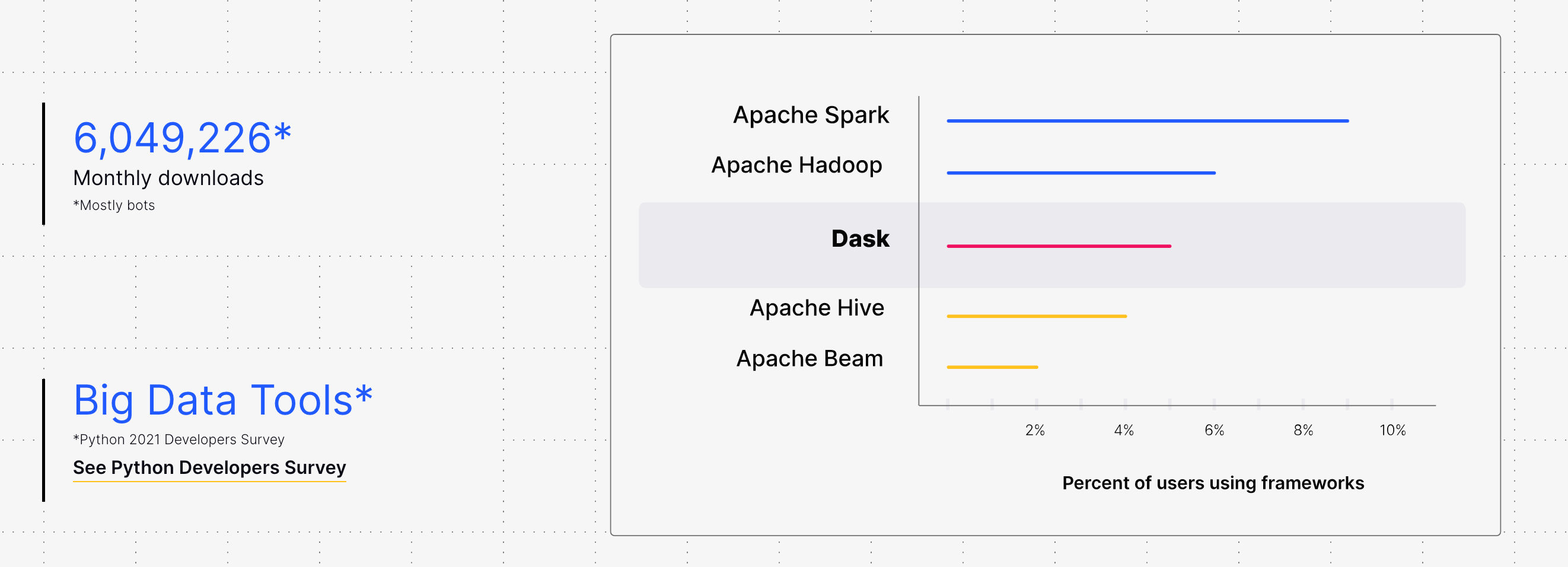

Trends

Dask adoption metrics

Delayed

Delayed (in a nutshell)

The single-function way to parallelize general python code

Imports

LocalCluster

Dask can set itself up easily in your Python session if you create a LocalCluster object, which sets everything up for you.

Normal Dask work …

Alternatively, you can skip this part, and Dask will operate within a thread pool contained entirely within your local process.
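A minimal sketch of the LocalCluster route, assuming the `distributed` package is installed. The worker and thread counts are arbitrary, and `processes=False` (workers run as threads inside the current process) is only used here to keep the example lightweight.

```python
import dask.array as da
from dask.distributed import Client, LocalCluster

# processes=False: the workers are threads inside this Python process
with LocalCluster(n_workers=2, threads_per_worker=2, processes=False) as cluster:
    with Client(cluster) as client:        # becomes the default scheduler
        link = client.dashboard_link       # URL of the diagnostic dashboard (needs bokeh)
        print(link)

        x = da.arange(1_000_000, chunks=100_000)
        result = x.sum().compute()         # executed by the cluster's workers
        print(result)
```

Using both objects as context managers makes sure the workers are shut down when the block ends.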

Delaying Python tasks

A job (I)
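The code of this slide did not survive extraction. A plausible reconstruction, assuming the same `inc`, `double` and `add` helpers that are decorated with `@dask.delayed` later in this deck, is:

```python
def inc(x):
    """Increment x by 1."""
    return x + 1


def double(x):
    """Multiply x by 2."""
    return x * 2


def add(x, y):
    """Add x and y."""
    return x + y
```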

A job (II): piecing elements together

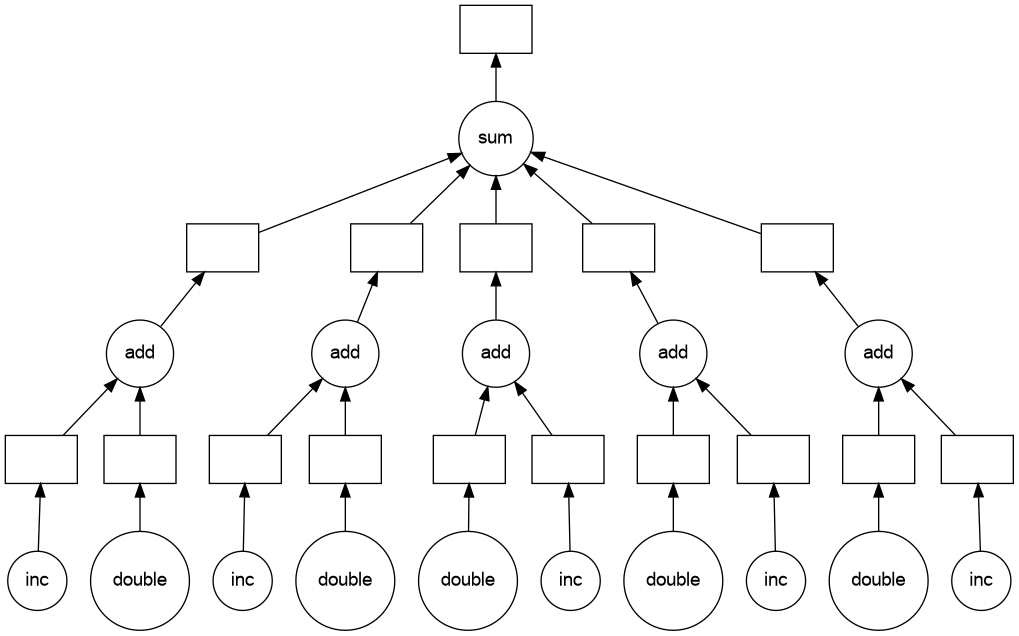

```python
data = [1, 2, 3, 4, 5]

output = []
for x in data:
    a = inc(x)          # increment x
    b = double(x)       # multiply x by 2
    c = add(a, b)       # c == (x+1) + 2*x == 3*x+1
    output.append(c)

total = sum(output)
total
```

`total` evaluates to 50.

Delaying existing functions

```python
import dask

output = []
for x in data:
    a = dask.delayed(inc)(x)         # decorating inc using dask.delayed()
    b = dask.delayed(double)(x)
    c = dask.delayed(add)(a, b)
    output.append(c)

total = dask.delayed(sum)(output)    # decorating sum()
total
```

`total` is now a lazy `Delayed('sum-b042864a-ca75-4d5d-9921-92cf16c401d2')` object; `total.compute()` returns 50.

Another way of using decorators

```python
import dask

@dask.delayed                    # (1) decorating the definition
def inc(x):
    return x + 1

@dask.delayed
def double(x):
    return x * 2

@dask.delayed
def add(x, y):
    return x + y

data = [1, 2, 3, 4, 5]

output = []
for x in data:                   # (2) reusing the Python code
    a = inc(x)
    b = double(x)
    c = add(a, b)
    output.append(c)

total = dask.delayed(sum)(output)
total
total.compute()                  # (3) collecting results
```

`total.compute()` returns 50.

Visualizing the task graph
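Even without graphviz, the graph can be inspected as a plain mapping from keys to tasks. A minimal sketch that redefines the decorated helpers of the previous slide; `__dask_graph__()` belongs to Dask's collection protocol, and the `visualize` call is left commented out because it needs the optional graphviz dependency.

```python
import dask

@dask.delayed
def inc(x):
    return x + 1

@dask.delayed
def double(x):
    return x * 2

@dask.delayed
def add(x, y):
    return x + y

output = [add(inc(x), double(x)) for x in [1, 2, 3, 4, 5]]
total = dask.delayed(sum)(output)

# Each key looks like "<function name>-<unique id>"
graph = dict(total.__dask_graph__())
prefixes = [str(key).split("-")[0] for key in graph]
print(len(graph), sorted(set(prefixes)))

# Rendering the DAG as an image requires graphviz:
# total.visualize(filename="total.svg")
```

Five `inc`, five `double`, five `add` and one `sum` task: the graph mirrors the loop structure exactly.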

Tweaking the task graph

Another job

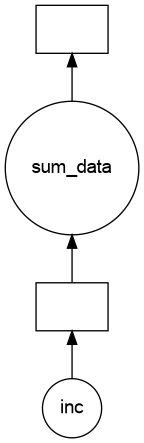

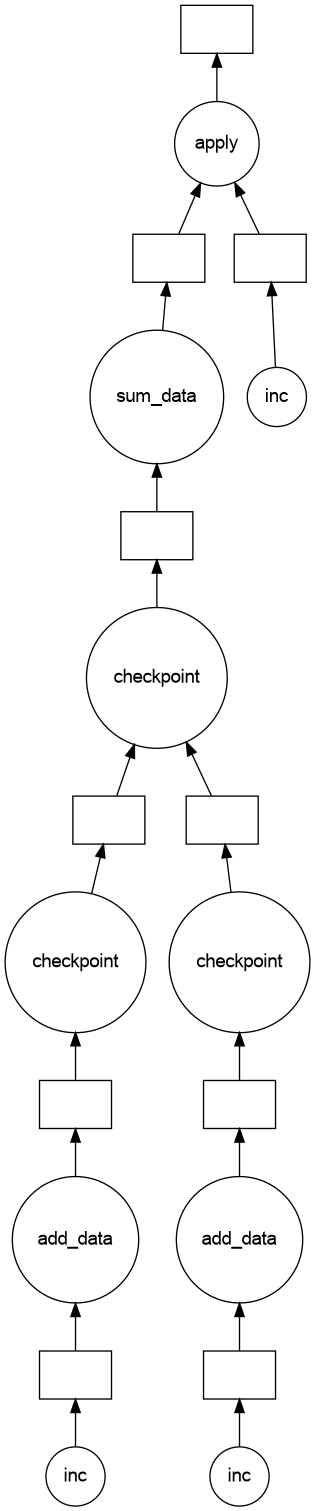

6

A flawed task graph

Fixing

12

The result of evaluating sum_data() depends not only on its argument (hence on the Delayed e), but also on the side effects of add_data(), that is, on the Delayed b and d.

Note that not only was the DAG wrong: the result obtained above was not the intended result either.
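The lecture's `add_data()` and `sum_data()` code is not reproduced here. As a hypothetical sketch (the signatures below are assumptions), the usual fix is to avoid side effects and pass every dependency explicitly as an argument, so that the DAG records it:

```python
import dask

@dask.delayed
def add_data(data, x):
    # Pure: return a new list instead of mutating `data` in place
    return data + [x]

@dask.delayed
def sum_data(data):
    return sum(data)

a = [1, 2, 3]
step1 = add_data(a, 4)       # depends on a
step2 = add_data(step1, 5)   # depends on step1: the order is now part of the graph
result = sum_data(step2)     # depends on step2, hence (transitively) on step1

print(result.compute())      # 1 + 2 + 3 + 4 + 5
```

Because each function only reads its inputs and returns a fresh value, the scheduler is free to run tasks in any order compatible with the graph without changing the result.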

By default, Dask Delayed uses the threaded scheduler in order to avoid data transfer costs.

Consider using the multiprocessing scheduler, or the dask.distributed scheduler (on a local machine or on a cluster), if your code does not release the GIL well (computations dominated by pure Python code, or computations wrapping external code that holds onto the GIL).
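A minimal sketch of how the scheduler can be chosen per call or for a whole block of code; `count_up` is a hypothetical pure-Python workload. The process-based call is left commented out because it requires picklable functions and, on some platforms, an `if __name__ == "__main__":` guard.

```python
import dask

@dask.delayed
def count_up(n):
    # Pure-Python loop: it holds the GIL while running,
    # so threads cannot execute several of these truly in parallel
    acc = 0
    for i in range(n):
        acc += i
    return acc

parts = [count_up(10_000) for _ in range(4)]
total = dask.delayed(sum)(parts)

r_threads = total.compute()                       # default for Delayed: threaded scheduler
r_sync = total.compute(scheduler="synchronous")   # one thread, convenient for debugging

with dask.config.set(scheduler="threads"):        # set the scheduler for a whole block
    r_cfg = total.compute()

# For GIL-bound work like count_up, a process pool is usually the better choice:
# total.compute(scheduler="processes")
```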

High level collections

Importing the usual suspects

```python
import numpy as np
import pandas as pd              # standard dataframes in Python
import dask.dataframe as dd      # parallelized and distributed dataframes in Python
import dask.array as da
import dask.bag as db
```

Bird's-eye view

Dataframes

Dask DataFrames parallelize the popular pandas library, providing:

- Larger-than-memory execution for single machines, allowing you to process data that is larger than your available RAM

- Parallel execution for faster processing

- Distributed computation for terabyte-sized datasets

Dask DataFrames are similar to Apache Spark, but use the familiar pandas API and memory model.

One Dask dataframe is simply a coordinated collection of pandas dataframes on different computers

Dask DataFrame helps you process large tabular data by parallelizing Pandas, either on your laptop for larger-than-memory computing, or on a distributed cluster of computers.

- Just pandas: Dask DataFrames are a collection of many pandas DataFrames. The API is the same. The execution is the same.
- Large scale: works on 100 GiB on a laptop, or 100 TiB on a cluster.
- Easy to use: pure Python, easy to set up and debug.

Dask DataFrames coordinate many pandas DataFrames/Series arranged along the index. A Dask DataFrame is partitioned row-wise, grouping rows by index value for efficiency. These pandas objects may live on disk or on other machines.

Creating a dask dataframe

- In Dask, proper partitioning is a key performance issue.
- The dataframe API is (almost) the same as in Pandas!

| | a | b |
|---|---|---|
| 2021-09-01 00:00:00 | 0 | a |
| 2021-09-01 01:00:00 | 1 | b |
| 2021-09-01 02:00:00 | 2 | c |
| 2021-09-01 03:00:00 | 3 | a |
| 2021-09-01 04:00:00 | 4 | d |

Inside the dataframe

A sketch of the interplay between index and partitioning

```
(Timestamp('2021-09-01 00:00:00'),
 Timestamp('2021-09-06 00:00:00'),
 Timestamp('2021-09-11 00:00:00'),
 Timestamp('2021-09-16 00:00:00'),
 Timestamp('2021-09-21 00:00:00'),
 Timestamp('2021-09-26 00:00:00'),
 Timestamp('2021-10-01 00:00:00'),
 Timestamp('2021-10-06 00:00:00'),
 Timestamp('2021-10-11 00:00:00'),
 Timestamp('2021-10-16 00:00:00'),
 Timestamp('2021-10-21 00:00:00'),
 Timestamp('2021-10-26 00:00:00'),
 Timestamp('2021-10-31 00:00:00'),
 Timestamp('2021-11-05 00:00:00'),
 Timestamp('2021-11-10 00:00:00'),
 Timestamp('2021-11-15 00:00:00'),
 Timestamp('2021-11-20 00:00:00'),
 Timestamp('2021-11-25 00:00:00'),
 Timestamp('2021-11-30 00:00:00'),
 Timestamp('2021-12-05 00:00:00'),
 Timestamp('2021-12-09 23:00:00'))
```

A dataframe has a task graph


What’s in a partition?

| | a | b |
|---|---|---|
| npartitions=1 | | |
| 2021-09-06 | int64 | string |
| 2021-09-11 | ... | ... |

Slicing

| | a | b |
|---|---|---|
| npartitions=2 | | |
| 2021-10-01 00:00:00.000000000 | int64 | string |
| 2021-10-06 00:00:00.000000000 | ... | ... |
| 2021-10-09 05:00:59.999999999 | ... | ... |

Dask dataframes (cont’d)

Dask DataFrames coordinate many Pandas DataFrames/Series arranged along an index.

We define a Dask DataFrame object with the following components:

- A Dask graph with a special set of keys designating partitions, such as `('x', 0)`, `('x', 1)`, …

- A name to identify which keys in the Dask graph refer to this DataFrame, such as `'x'`

- An empty Pandas object containing appropriate metadata (e.g. column names, dtypes, etc.)

- A sequence of partition boundaries along the index called divisions

Methods

Reading from and writing to Parquet

```python
import os
import dask.dataframe as dd

fname = 'fhvhv_tripdata_2022-11.parquet'
dpath = '../../../../Downloads/'
globpath = 'fhvhv_tripdata_20*-*.parquet'

!ls -l ../../../../Downloads/fhvhv_tripdata_20*-*.parquet
```

```python
%%time
data = dd.read_parquet(
    os.path.join(dpath, globpath),
    categories=['PULocationID', 'DOLocationID'],
    engine='auto'
)
```

Partitioning and saving to parquet

Schedulers

After you have generated a task graph, it is the scheduler’s job to execute it (see Scheduling).

By default, for the majority of Dask APIs, when you call compute() on a Dask object, Dask uses the thread pool on your computer (a.k.a. the threaded scheduler) to run computations in parallel. This is true for Dask Array, Dask DataFrame, and Dask Delayed. The exception is Dask Bag, which uses the multiprocessing scheduler by default.

If you want more control, use the distributed scheduler instead. Despite having “distributed” in its name, the distributed scheduler works well on both single and multiple machines. Think of it as the “advanced scheduler”.

Performance

Dask schedulers come with diagnostics to help you understand the performance characteristics of your computations

By using these diagnostics and with some thought, we can often identify the slow parts of troublesome computations

The single-machine and distributed schedulers come with different diagnostic tools

These tools are deeply integrated into each scheduler, so a tool designed for one will not transfer over to the other

Dask query optimization

Visualize task graphs

Single threaded scheduler and a normal Python profiler
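A minimal sketch (the array computation is an arbitrary stand-in workload): with the single-threaded scheduler every task runs in the calling thread, so the standard `cProfile`/`pstats` modules see the actual work.

```python
import cProfile
import io
import pstats

import dask
import dask.array as da

x = da.random.random((1000, 1000), chunks=(250, 250))
y = (x + x.T).mean()

# Run all tasks in the current thread so that the profiler can observe them
profiler = cProfile.Profile()
with dask.config.set(scheduler="single-threaded"):
    profiler.enable()
    value = y.compute()
    profiler.disable()

buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats("cumulative").print_stats(10)   # 10 most expensive entries
report = buffer.getvalue()
print(value)
print(report)
```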

Diagnostics for the single-machine scheduler
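A minimal sketch using two of the diagnostics shipped in `dask.diagnostics` (the matrix product is an arbitrary workload). They hook into the local schedulers through callbacks, and therefore do not apply to the distributed scheduler.

```python
import dask.array as da
from dask.diagnostics import Profiler, ProgressBar

x = da.random.random((1000, 1000), chunks=(250, 250))
y = (x @ x.T).sum()

# ProgressBar prints a text progress bar; Profiler records one entry per task
with ProgressBar(), Profiler() as prof:
    result = y.compute()

print(len(prof.results))          # number of recorded tasks
print(prof.results[0]._fields)    # key, task, start_time, end_time, worker_id

# With bokeh installed, prof.visualize() draws the task timeline
```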

Diagnostics for the distributed scheduler and dashboard
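A minimal sketch, assuming the `distributed` package is installed (and `bokeh` for the dashboard itself); cluster sizes are arbitrary. `get_task_stream` records, for each task, which worker ran it and when, which is essentially what the dashboard's task stream plot displays.

```python
import dask.array as da
from dask.distributed import Client, LocalCluster, get_task_stream

x = da.random.random((2000, 2000), chunks=(500, 500))

with LocalCluster(n_workers=2, threads_per_worker=1, processes=False) as cluster:
    with Client(cluster) as client:
        print(client.dashboard_link)     # live dashboard, if bokeh is installed
        with get_task_stream() as ts:    # record what ran where, and when
            total = x.sum().compute()

print(total)
print(len(ts.data))                      # records for the executed tasks
```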

Scale up/Scale out

References

Reference

Ask for help

- `dask` tag on Stack Overflow, for usage questions

- GitHub issues, for bug reports and feature requests

- Gitter chat, for general, non-bug discussion

Books

- Scaling Python with Dask

- Data Science with Python and Dask

- Dask Definitive Guide (to appear 2025)

Blogs

Loading a Parquet file

| | title | text | date |
|---|---|---|---|
| npartitions=1 | | | |
| | int64 | int64 | int64 |
| | ... | ... | ... |

```
Dask Series Structure:
npartitions=1
    float64
        ...
Dask Name: getitem, 4 expressions
Expr=((ReadParquetFSSpec(117185e)[['passenger_count', 'tip_amount']]).mean(observed=False, chunk_kwargs={'numeric_only': False}, aggregate_kwargs={'numeric_only': False}, _slice='tip_amount'))['tip_amount']
```

Client

Client-23ac4916-e27e-11ef-a64c-300505fc3398

| Connection method: Cluster object | Cluster type: distributed.LocalCluster |
|---|---|
| Dashboard: http://127.0.0.1:8787/status | |

Cluster Info: LocalCluster 62d0ccd4

| Dashboard: http://127.0.0.1:8787/status | Workers: 5 |
|---|---|
| Total threads: 20 | Total memory: 30.96 GiB |
| Status: running | Using processes: True |


IFEBY030 – Technos Big Data – M1 MIDS/MFA/LOGOS – UParis Cité