Python Data Science Stack

Technologies Big Data, Master MIDS/MFA/LOGOS

2026-01-08

What is Python?

- first released in 1991

- designed by Guido van Rossum (BDFL)

- multi-purpose

- easy to read

- easy to learn

- object-oriented

- strongly and dynamically typed

- cross-platform

Features of Python

- High-level data types (tuple, dict, list, set, etc.)

- Standard libraries with batteries included

- String services

- Regular expressions

- Datetime

- …

- Libraries for scientific computing

- Easy and efficient I/O, many file formats

- OS, threading, multiprocessing

- Networking, email, HTML, web servers, scraping

- Can be extended with C/C++ and easily accelerated (Cython, Numba, PyPy)

- Tons of external libraries
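To make the data-type and standard-library bullets concrete, here is a small sketch that uses only built-in types and a few standard-library modules (re, datetime, collections); the sample text and variable names are invented for illustration:

```python
import re
from collections import Counter
from datetime import date, timedelta

# High-level built-in data types
point = (3, 4)                        # tuple: immutable sequence
ages = {"alice": 31, "bob": 27}       # dict: key -> value mapping
langs = ["python", "r", "julia"]      # list: mutable sequence
tags = {"python", "data", "python"}   # set: the duplicate "python" is dropped

# Regular expressions (re module): extract all ISO dates from a string
text = "Course starts 2026-01-08, exam on 2026-03-15."
found = re.findall(r"\d{4}-\d{2}-\d{2}", text)

# Datetime: parse the first date and do calendar arithmetic on it
start = date.fromisoformat(found[0])
one_week_later = start + timedelta(weeks=1)

# collections.Counter: count word frequencies
words = Counter("to be or not to be".split())

print(len(tags), found, one_week_later, words.most_common(2))
```

Nothing here needs a third-party package: this is what "batteries included" refers to.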

Features of Python

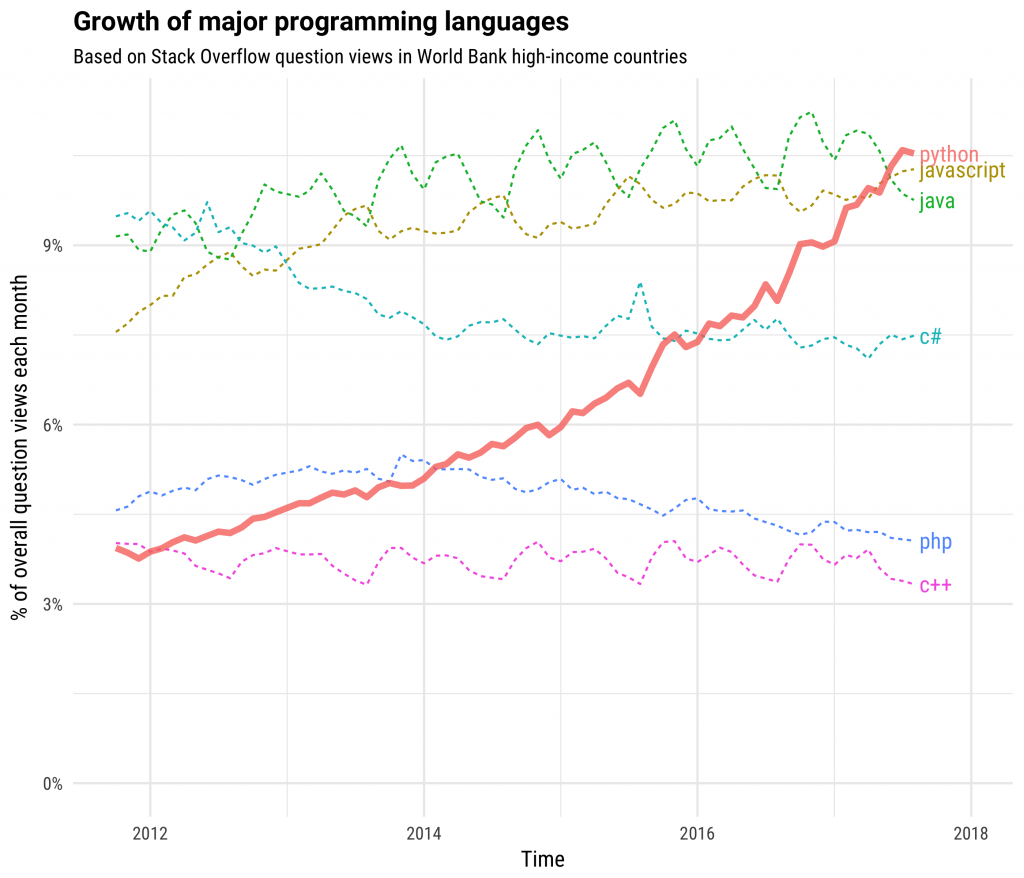

Trends

Monitoring trends in software development

The Stack Overflow 2025 survey

Python popularity growth

Why Python for data science?

Besides these features, Python has:

- large communities for data science, analytics, etc.

- many well-established, well-documented libraries

- demand from the industry

The Python Data Science Stack: Maths / Science

NumPy

- numpy is all about multi-dimensional arrays and matrices

- High-level computation such as linear algebra (numpy.linalg) and random number generation (numpy.random)

- Fast but not optimized for multi-threaded architectures

- Not for distributed multi-machine settings
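A minimal sketch of the numpy features listed above (arrays, vectorized arithmetic, numpy.linalg, numpy.random); the matrix, vector and seed are arbitrary example values:

```python
import numpy as np

# Multi-dimensional arrays: a 2x2 matrix and a length-2 vector
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])

# Vectorized, element-wise computation (no explicit Python loop)
squares = np.arange(5) ** 2

# Linear algebra with numpy.linalg: solve the linear system A @ x = b
x = np.linalg.solve(A, b)

# Random number generation with numpy.random, using a seeded Generator for reproducibility
rng = np.random.default_rng(seed=42)
samples = rng.normal(loc=0.0, scale=1.0, size=(1000, 3))
col_means = samples.mean(axis=0)   # one mean per column, shape (3,)

print(squares, x, col_means)
```

default_rng is the recommended entry point to numpy.random in recent NumPy versions; the older module-level functions such as np.random.seed still exist.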

SciPy

- scipy extends numpy with extra modules: optimization, integration, FFT, signal and image processing, …

- Sparse matrix formats in scipy.sparse
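A short sketch of three of the scipy modules mentioned above; the objective function, the integrand and the matrix size are illustrative choices, not taken from the course:

```python
import numpy as np
from scipy import integrate, optimize, sparse


# Optimization: a smooth 2D function whose minimum is at (1, -2)
def f(p):
    return (p[0] - 1.0) ** 2 + (p[1] + 2.0) ** 2


res = optimize.minimize(f, x0=np.zeros(2))   # default method for this unconstrained problem: BFGS

# Integration: integral of sin(x) over [0, pi]; the exact value is 2
area, abs_err = integrate.quad(np.sin, 0.0, np.pi)

# Sparse matrices: a 1000x1000 identity stored in CSR format (only 1000 non-zeros kept)
eye = sparse.identity(1000, format="csr")
v = np.ones(1000)
w = eye @ v   # sparse matrix-vector product, returns a dense numpy array

print(res.x, area, eye.nnz)
```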

The Python Data Science Stack: Data processing

Pandas

- pandas builds upon numpy to provide a high-performance, easy-to-use DataFrame object for high-level data processing

- Easy I/O with most data formats: csv, json, hdf5, feather, parquet, etc.

- SQL semantics: select, filter, join, groupby, agg, where, etc.

- Very large general-purpose library for data processing; not distributed, medium-scale data only
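A sketch mapping the SQL semantics above to pandas calls, on a hypothetical toy dataset; an in-memory buffer (io.StringIO) stands in for a CSV file to illustrate the I/O side:

```python
import io

import pandas as pd

# Hypothetical toy data: sales per city, plus a city -> country lookup table
sales = pd.DataFrame({
    "city": ["Paris", "Lyon", "Paris", "Berlin", "Lyon"],
    "amount": [120, 80, 200, 150, 40],
})
cities = pd.DataFrame({
    "city": ["Paris", "Lyon", "Berlin"],
    "country": ["FR", "FR", "DE"],
})

# I/O: write to CSV and read it back (the buffer plays the role of a file on disk)
buf = io.StringIO()
sales.to_csv(buf, index=False)
buf.seek(0)
sales_back = pd.read_csv(buf)

# SELECT city, amount ... WHERE amount > 50  ->  boolean filtering + column selection
big = sales_back.loc[sales_back["amount"] > 50, ["city", "amount"]]

# JOIN cities ON city  ->  merge
joined = big.merge(cities, on="city", how="left")

# GROUP BY country with SUM and COUNT  ->  groupby + agg
per_country = joined.groupby("country")["amount"].agg(["sum", "count"])
print(per_country)
```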

Dask

- dask is roughly a distributed and parallel pandas

- Largely the same API as pandas!

- Task scheduling, lazy evaluation, distributed dataframes

- Still young and far behind spark, but can be useful

- Easier than spark, full Python (no JVM)

PySpark

- pyspark is the Python API to spark, a big data processing framework

- We will use it a lot in this course

- The native API to spark is Scala: pyspark can be slower (much slower if you are not careful)

SQLAlchemy

- Object Relational Mapper (ORM)

- Database connectivity through drivers (e.g. ODBC data sources via pyodbc)

PyArrow

The universal columnar format and multi-language toolbox for fast data interchange and in-memory analytics

Apache Arrow defines a language-independent columnar memory format for flat and hierarchical data, organized for efficient analytic operations on modern hardware like CPUs and GPUs. The Arrow memory format also supports zero-copy reads for lightning-fast data access without serialization overhead.

The Python Data Science Stack: Data Visualization

Matplotlib

- matplotlib provides versatile 2D plotting capabilities for scientific computing and data visualization

- Large and customizable library

- The historical one, somewhat low-level when plotting data
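A minimal sketch with matplotlib's object-oriented API; it also shows why the library can feel low-level for data plotting, since labels, legend and line styles are all set by hand. The Agg backend and the in-memory PNG are choices made here so the example runs without a display:

```python
import io

import matplotlib
matplotlib.use("Agg")            # non-interactive backend: render without a screen
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 2 * np.pi, 200)

# Object-oriented API: create the figure and axes explicitly, then draw on the axes
fig, ax = plt.subplots(figsize=(6, 4))
ax.plot(x, np.sin(x), label="sin(x)")
ax.plot(x, np.cos(x), linestyle="--", label="cos(x)")
ax.set_xlabel("x")
ax.set_ylabel("value")
ax.set_title("Two curves with matplotlib")
ax.legend()

# Save as PNG into memory (pass a filename such as "curves.png" to write to disk instead)
buf = io.BytesIO()
fig.savefig(buf, format="png", dpi=100)
plt.close(fig)
```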

Plotly

- An interactive visualization library for web browsers, based on the JavaScript graphics library d3.js

- A clean and simple Python interface, usable in a Jupyter notebook

- Interactions enabled by default (zoom, etc.) and fast rendering

- Very good-looking plots with good default parameters

Altair

Vega-Altair: Declarative Visualization in Python

Vega-Altair is a declarative visualization library for Python. Its simple, friendly and consistent API, built on top of the powerful Vega-Lite grammar, empowers you to spend less time writing code and more time exploring your data.

The Python Data Science Stack: Dashboards

Dash

Shiny

The Python Data Science Stack: Environments

Pure Python interfaces

Ways to use all these tools:

- Write a script script.py and run it with python directly from a CLI: python script.py

- Use the ipython interactive shell
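A minimal sketch of what such a script.py could contain (hypothetical content): it averages the numbers passed on the command line. The if __name__ == "__main__" guard means the same file can be run with python script.py 1 2 3, run from ipython with %run script.py 1 2 3, or imported without triggering the computation:

```python
"""script.py: a minimal command-line script (hypothetical example).

Run it from a terminal with:  python script.py 1 2 3
"""
import sys
from statistics import mean


def main(argv: list[str]) -> int:
    # Convert the command-line arguments to floats and print their mean
    values = [float(a) for a in argv]
    if not values:
        print("usage: python script.py NUMBER [NUMBER ...]", file=sys.stderr)
        return 1
    print(f"mean = {mean(values):.2f}")
    return 0


if __name__ == "__main__":
    # Executed only when the file is run as a script, not when it is imported
    main(sys.argv[1:])
```

A real CLI would typically pass the return value to sys.exit so the shell sees the exit status; it is left out here to keep the sketch minimal.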

Interfaces: Jupyter

- Use jupyter: a web application for creating and running documents called notebooks (with the .ipynb extension)

- Notebooks can contain code, equations, visualizations, text, etc. (literate programming)

- Each notebook has a kernel running a Python, R, Julia, … process

- A problem: an ipynb file is a JSON document. This leads to bad code diffs, a problem for git versioning
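To see why notebooks diff poorly, a sketch that builds a tiny notebook as a Python dict following the nbformat 4 layout (simplified: real files carry more metadata) and compares its JSON text before and after re-executing a cell. The code in the cell is unchanged, yet the execution count and stored outputs show up in the diff:

```python
import difflib
import json

# A tiny notebook in the nbformat 4 layout: one markdown cell and one code cell
notebook = {
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Title"]},
        {
            "cell_type": "code",
            "execution_count": 1,
            "metadata": {},
            "outputs": [],
            "source": ["print(1 + 1)"],
        },
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 4,
}


def as_lines(nb):
    # Serialize as indented JSON text, one line per item, as it would sit in a .ipynb file
    return json.dumps(nb, indent=1).splitlines()


before = as_lines(notebook)

# "Re-run" the code cell: same source, but a new counter and a stored output
code_cell = notebook["cells"][1]
code_cell["execution_count"] = 2
code_cell["outputs"] = [{"output_type": "stream", "name": "stdout", "text": ["2\n"]}]

after = as_lines(notebook)

# Keep only added/removed lines of the unified diff (skip the ---/+++ file headers)
changed = [
    line
    for line in difflib.unified_diff(before, after, lineterm="")
    if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
]
print("\n".join(changed))
```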

Quarto

Interfaces/IDE: VS Code (and other editors)

Interface/IDE: Positron

Python and R

Reticulate

Reticulate embeds a Python session within your R session, enabling seamless, high-performance interoperability. If you are an R developer who uses Python for some of your work, or a member of a data science team that uses both languages, reticulate can dramatically streamline your workflow!

Py2R

Python has several well-written packages for statistics and data science, but CRAN, R’s central repository, contains thousands of packages implementing sophisticated statistical algorithms that have been field-tested over many years. Thanks to the rpy2 package, Pythonistas can take advantage of the great work already done by the R community. rpy2 provides an interface that allows you to run R in Python processes. Users can move between languages and use the best of both programming languages.

But also…

Many libraries for statistics, machine learning and deep learning

Statistics

Machine learning

Deep learning

Getting faster

- numba, cython, cupy

And …

Python APIs for most databases and clouds

Processing and plotting tools for geospatial data

Image processing

Web development, web scraping

among many, many, many other things…

Thank you!

IFEBY030 – Technos Big Data – M1 MIDS/MFA/LOGOS – UParis Cité